In recent years, AI training clusters have become the most demanding battlefield for high-speed interconnects. As model parameters scale from billions to trillions, bandwidth requirements rise sharply. From the outside, it may seem logical that 1.6T should quickly replace 800G.

Yet in production AI training clusters, 800G remains the mainstream choice. This is not a technology lag but a rational engineering decision.

AI Training Clusters Prioritize Balance, Not Just Peak Speed

In an AI training cluster, network performance is not defined by a single link speed. It is defined by system balance: compute, memory, switching capacity, power, cooling, and cost.

Today’s AI training cluster architectures are already well aligned with 800G. GPU nodes, leaf–spine fabrics, and optical interconnects are built around 800G ports (typically 8 lanes of 100G PAM4), which makes performance scaling predictable. Moving directly to 1.6T often disrupts this balance rather than improving it.
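One way to see why 800G and 1.6T belong to different hardware generations is to compose each port speed from its electrical lanes. The sketch below assumes the common 8-lane configurations (8 × 100G PAM4 for 800G, 8 × 200G PAM4 for 1.6T); the function name and configuration table are illustrative, not taken from any vendor tool. Under that assumption the lane count stays the same while the per-lane rate doubles, which is why 1.6T needs new SerDes in both the switch silicon and the optics.

```python
# Sketch: composing Ethernet port speeds from electrical lanes.
# Assumption: 800G = 8 x 100G PAM4 and 1.6T = 8 x 200G PAM4, the common
# configurations for current modules; other lane splits are not modeled.

def port_speed_gbps(lanes: int, gbps_per_lane: int) -> int:
    """Nominal port speed is the lane count times the per-lane data rate."""
    return lanes * gbps_per_lane

# Hypothetical lookup table: port name -> (lanes, Gb/s per lane).
CONFIGS = {
    "800G": (8, 100),
    "1.6T": (8, 200),
}

for name, (lanes, rate) in CONFIGS.items():
    print(f"{name}: {lanes} x {rate}G = {port_speed_gbps(lanes, rate)}G")
```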

800G Offers the Best Bandwidth-to-Maturity Ratio

From a deployment perspective, 800G sits at a sweet spot:

Ecosystem maturity: DSPs, optical engines, connectors, and testing standards for 800G are well established.

Manufacturing yield: Compared with 1.6T, 800G modules deliver higher yield and better consistency.

Interoperability: AI training clusters require massive port counts, and 800G maps cleanly onto current switching silicon (a 51.2 Tb/s switch ASIC exposes 64 × 800G ports).
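The port-count argument comes down to simple division: a switch ASIC's total bandwidth is shared among its ports. This sketch assumes a 51.2 Tb/s ASIC, the generation widely used for 800G fabrics; `port_count` is a hypothetical helper, and real products may reserve capacity or support breakout modes that change the numbers.

```python
# Sketch: how many full-speed ports a switch ASIC can serve.
# Assumption: a 51.2 Tb/s ASIC; breakouts and reserved capacity are ignored.

ASIC_CAPACITY_GBPS = 51_200

def port_count(asic_capacity_gbps: int, port_speed_gbps: int) -> int:
    """Number of full-speed ports the ASIC's total bandwidth can feed."""
    return asic_capacity_gbps // port_speed_gbps

print(port_count(ASIC_CAPACITY_GBPS, 800))   # 64
print(port_count(ASIC_CAPACITY_GBPS, 1600))  # 32
```

On the same silicon, moving to 1.6T ports halves the switch radix; keeping 64 ports at 1.6T requires a 102.4 Tb/s ASIC.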

In contrast, 1.6T is still in an early adoption phase. While technically impressive, it introduces higher risk in large-scale AI training cluster rollouts.

Power and Thermal Reality Favors 800G

Power efficiency is a silent constraint in every AI training cluster.

A 1.6T optical module roughly doubles bandwidth in a similar form factor, but it also draws substantially more power per module, concentrating more heat in each port. This creates challenges in airflow design, thermal budgets, and rack-level planning.

800G, by comparison, offers a more manageable power profile, making it easier to scale AI training clusters without redesigning cooling infrastructure.
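The thermal point can be made concrete with two quantities: energy per bit and total optics heat per switch faceplate. In the sketch below, every wattage is a hypothetical placeholder chosen only for illustration, not a measured or datasheet value; substitute real vendor figures before drawing conclusions. With these assumed inputs, the 1.6T module is slightly more efficient per bit, yet a fully populated 64-port faceplate must shed considerably more heat.

```python
# Sketch: optical-module power per bit vs. heat per switch faceplate.
# All wattages are HYPOTHETICAL placeholders, not datasheet values.

def picojoules_per_bit(module_watts: float, port_gbps: float) -> float:
    """W / (Gb/s) equals nJ/bit; scaling by 1000 gives pJ/bit."""
    return module_watts * 1000.0 / port_gbps

def switch_optics_watts(ports: int, module_watts: float) -> float:
    """Total optics heat a fully populated faceplate must dissipate."""
    return ports * module_watts

# Hypothetical inputs: port name -> (Gb/s, module watts).
MODULES = {"800G": (800, 16.0), "1.6T": (1600, 28.0)}
PORTS = 64  # assumed 64-port faceplate (at 1.6T this implies a 102.4 Tb/s switch)

for name, (gbps, watts) in MODULES.items():
    print(f"{name}: {picojoules_per_bit(watts, gbps):.1f} pJ/bit, "
          f"{watts:.0f} W per port, "
          f"{switch_optics_watts(PORTS, watts):.0f} W for {PORTS} ports")
```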

Network Topology Still Matches 800G

Most AI training clusters today rely on Clos or Dragonfly+ topologies whose switch radix and cabling plans are sized for 800G ports. Switching to 1.6T would require:

New switch ASIC generations

Higher-risk optical packaging

Revalidation of loss budgets and fiber management

For many operators, increasing 800G port density is simply more efficient than rushing into 1.6T.
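Topology sizing shows how tightly a fabric is tied to port speed. In a non-blocking two-tier leaf-spine (Clos) fabric built from identical switches of radix k, each leaf uses k/2 ports for hosts and k/2 for spine uplinks, giving k × k/2 host-facing ports. The sketch combines that textbook formula with an assumed 51.2 Tb/s ASIC; the helper name is hypothetical.

```python
# Sketch: endpoint capacity of a non-blocking two-tier leaf-spine fabric.
# Assumptions: identical switches of radix k, a 51.2 Tb/s ASIC, no breakouts.

ASIC_CAPACITY_GBPS = 51_200

def two_tier_endpoints(radix: int) -> int:
    """Host-facing ports when each leaf splits its ports evenly up/down."""
    if radix < 2 or radix % 2:
        raise ValueError("radix must be an even number >= 2")
    down_per_leaf = radix // 2  # half of each leaf's ports face hosts
    leaves = radix              # each spine port connects to a distinct leaf
    return leaves * down_per_leaf

for speed_gbps in (800, 1600):
    radix = ASIC_CAPACITY_GBPS // speed_gbps
    print(f"{speed_gbps}G ports: radix {radix}, "
          f"{two_tier_endpoints(radix)} endpoints")
```

Under these assumptions, 1.6T ports on current silicon shrink a two-tier fabric from 2,048 to 512 endpoints, so operators would need either a new ASIC generation or an extra switching tier to keep the same scale.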

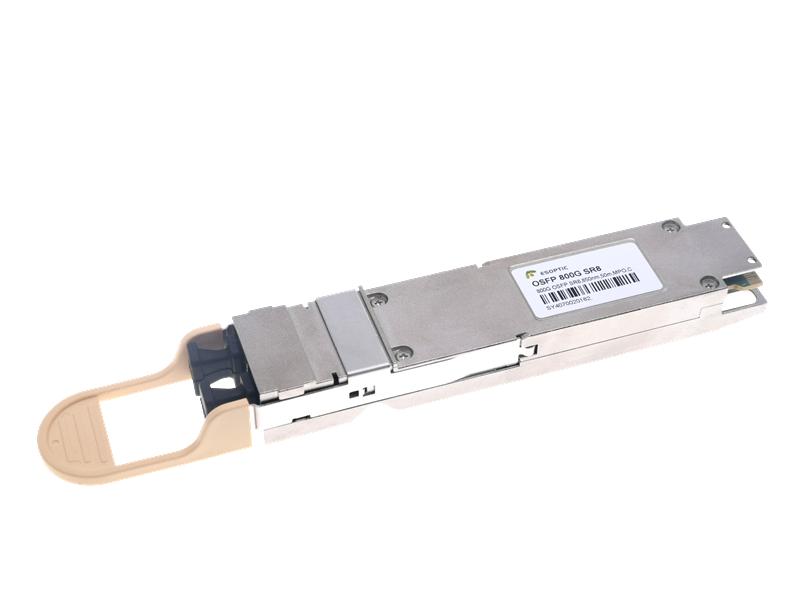

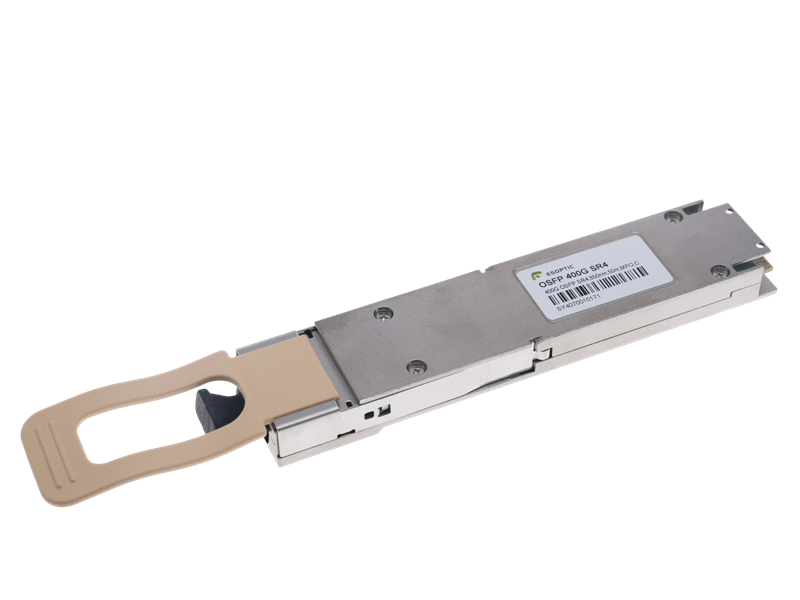

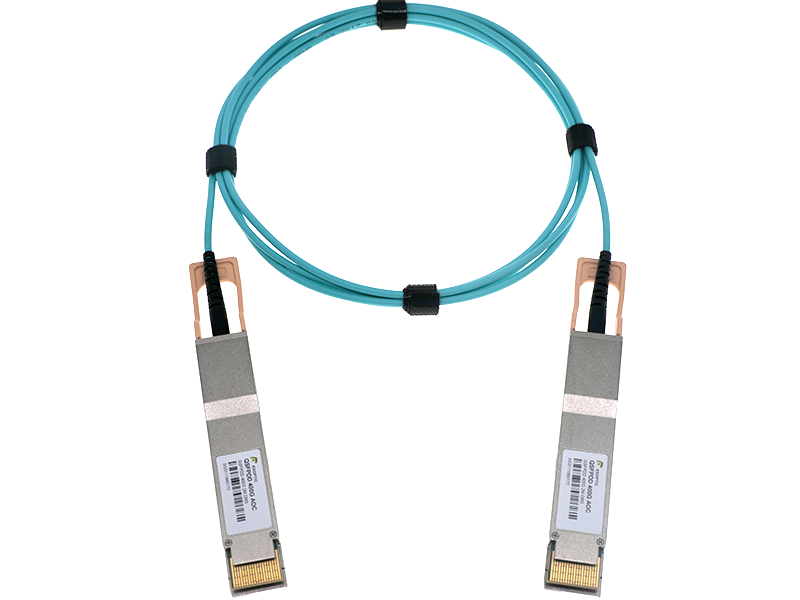

Where ESOPTIC Fits into the 800G Reality

At ESOPTIC, we see firsthand how customers design AI training clusters in real production environments. Our 800G optical modules, AOCs, and DACs are built for high-density, high-stability deployments, which is exactly what AI training clusters demand today.

Rather than chasing specifications alone, ESOPTIC focuses on deployable performance, reliability, and lifecycle stability: the same priorities that keep 800G dominant in real-world AI training clusters.

Will 1.6T Replace 800G? Yes — But Not Yet

1.6T will absolutely have its moment, especially for next-generation AI training clusters beyond 2026. But until power efficiency, ecosystem maturity, and cost curves align, 800G remains the most practical backbone for AI training clusters worldwide.

FAQ

1. Why is 800G more popular than 1.6T in AI training clusters?

Because 800G offers a better balance of performance, power efficiency, maturity, and cost.

2. Is 1.6T technically superior to 800G?

Yes in raw bandwidth, but not yet in deployment readiness for large AI training clusters.

3. Does 800G limit AI model training performance?

No. For current distributed training architectures, 800G provides sufficient bandwidth when properly scaled.

4. When will 1.6T become mainstream?

Likely beyond 2026, once switching silicon, optics, and cooling systems have fully matured.

5. What does ESOPTIC offer for AI training clusters?

ESOPTIC provides stable, high-density 800G optical modules, AOC, and DAC solutions optimized for AI training cluster deployment.